Computer Graphics Independent Study, Spring 2016

Exploring pbrt with Lightcuts

Chris Butler

Nico Pampe

What is pbrt?

For our study we followed the second edition of Physically Based Rendering: From Theory to Implementation, often abbreviated as pbrt. The authors maintain a website dedicated to the book and related software here. The third edition of the book is expected to be released in late 2016.

The book walks through the design and implementation of an offline, ray-tracing rendering framework called pbrt. We read through most of the book and made minor modifications to the provided base code.

Although the third edition of the book is not out yet, the code it describes is available and was our version of choice. It offers a number of improvements, including the use of the CMake build system. We used the third edition code when possible, but reverted to the second edition code for the final paper because the third edition code did not implement the virtual lights we needed.

The pbrt code is available on GitHub. Our modifications are available as forked repositories on GitHub: pbrt-v2 and pbrt-v3.

Lightcuts Overview

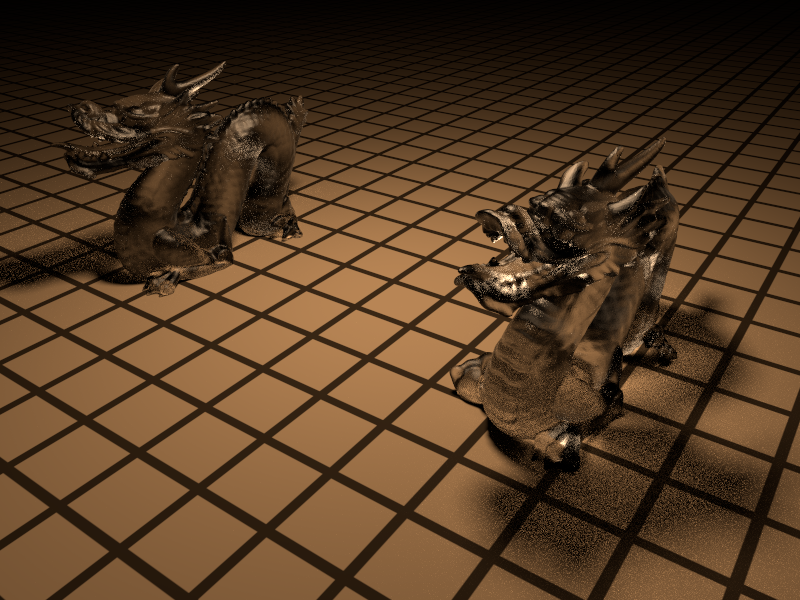

Our study concluded with a larger, more substantial change to pbrt. We chose to implement the "Lightcuts" algorithm, which accelerates global illumination. Our work is based on the paper "Lightcuts: A Scalable Approach to Illumination" (Walter et al., 2005).

Global illumination is the process of lighting a scene while accounting for shadows, reflections, and other effects in which objects anywhere in the scene influence the end result. This is in contrast to local illumination, where only the immediate geometry, often the size of a pixel, and the properties of the light are considered.

The lightcuts algorithm builds on the concept of virtual lights. A very large number of point, directional, and spot lights are placed in the scene to emulate area lights, environment lighting, and reflected light. The details of how this is done are beyond the scope of our work. Interested readers should consult Keller's 1997 SIGGRAPH paper on Instant Radiosity. (slides)

After virtual lights have been distributed across the scene, the lightcuts framework kicks in. A binary light tree is constructed for each type of light (point, directional, and spot) based on physical proximity in the scene and properties of the lights such as intensity. Each leaf is an individual light, and each interior node is a cluster of the similar lights beneath it. For each point being lit, Lightcuts chooses a cut through the tree: a set of nodes such that every path from the root to a leaf passes through exactly one of them. Each cluster on the cut is evaluated once as a close-enough summary of all the lights it contains, which can save many thousands of light evaluations and significantly improves render time. The paper bounds the error introduced by each cluster and refines the cut until that bound falls below a perceptual threshold, so in practice errors do not manifest in the image.
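The tree-and-cut idea can be sketched in a few dozen lines. The following is a simplified illustration in Python, not pbrt's C++ code and not the full algorithm from the paper. It assumes omnidirectional point lights with scalar intensity and uses a bare inverse-square falloff, ignoring the material, cosine, and visibility terms. All names (`Node`, `build_tree`, `select_cut`, and so on) are hypothetical, and the greedy pairing loop is a naive stand-in for a real clustering scheme.

```python
import heapq
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    intensity: float                 # total intensity of every light below this node
    lo: Vec3                         # bounding-box minimum corner
    hi: Vec3                         # bounding-box maximum corner
    rep: Vec3                        # position of the representative light
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def merge(a: Node, b: Node) -> Node:
    lo = tuple(min(p, q) for p, q in zip(a.lo, b.lo))
    hi = tuple(max(p, q) for p, q in zip(a.hi, b.hi))
    # Simplification: keep the brighter child's representative
    # (the paper picks it randomly, weighted by intensity).
    rep = a.rep if a.intensity >= b.intensity else b.rep
    return Node(a.intensity + b.intensity, lo, hi, rep, a, b)


def merge_cost(a: Node, b: Node) -> float:
    # Cluster "size": intensity times squared bounding-box diagonal.
    m = merge(a, b)
    return m.intensity * sum((h - l) ** 2 for l, h in zip(m.lo, m.hi))


def build_tree(lights: List[Tuple[Vec3, float]]) -> Node:
    if not lights:
        raise ValueError("need at least one light")
    nodes = [Node(i, p, p, p) for p, i in lights]
    while len(nodes) > 1:                      # naive greedy pairing
        pairs = [(i, j) for i in range(len(nodes)) for j in range(i + 1, len(nodes))]
        i, j = min(pairs, key=lambda ij: merge_cost(nodes[ij[0]], nodes[ij[1]]))
        merged = merge(nodes[i], nodes[j])
        nodes = [n for k, n in enumerate(nodes) if k not in (i, j)] + [merged]
    return nodes[0]


def dist2(a: Vec3, b: Vec3) -> float:
    return sum((p - q) ** 2 for p, q in zip(a, b))


def estimate(n: Node, x: Vec3) -> float:
    # Treat the whole cluster as one light at the representative position.
    return n.intensity / max(dist2(x, n.rep), 1e-9)


def error_bound(n: Node, x: Vec3) -> float:
    if n.left is None:
        return 0.0                             # a single light is evaluated exactly
    nearest = tuple(min(max(c, l), h) for c, l, h in zip(x, n.lo, n.hi))
    d2 = dist2(x, nearest)                     # closest the cluster can be to x
    return float("inf") if d2 == 0.0 else n.intensity / d2


def select_cut(root: Node, x: Vec3, rel_error: float = 0.02):
    """Refine the worst cluster until every bound is below rel_error * total."""
    heap = [(-error_bound(root, x), 0, root)]  # max-heap via negated bound
    total, tie = estimate(root, x), 1
    while -heap[0][0] > rel_error * total:
        _, _, node = heapq.heappop(heap)
        total -= estimate(node, x)
        for child in (node.left, node.right):  # replace the cluster by its children
            total += estimate(child, x)
            heapq.heappush(heap, (-error_bound(child, x), tie, child))
            tie += 1
    return [n for _, _, n in heap], total


lights = [((0.0, 0.0, 0.0), 1.0), ((0.1, 0.0, 0.0), 1.0),
          ((100.0, 0.0, 0.0), 1.0), ((100.1, 0.0, 0.0), 1.0)]
cut, approx = select_cut(build_tree(lights), (0.0, 0.0, 5.0))
print(len(cut), "nodes on the cut for", len(lights), "lights")
```

With a shading point close to one group of lights and far from another, the nearby lights end up as individual leaves on the cut while the distant group stays a single cluster. Setting `rel_error` to 0 forces the cut all the way down to the leaves, which reproduces the brute-force sum.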

Goals

We had five primary goals for this study.

- Produce a paper on an advanced topic in graphics

- Gain experience in an unstructured, independent study course

- Learn about the algorithms used in pbrt

- Understand the software engineering design principles used by pbrt

- Learn how to use pbrt to render complex scenes

Results

We had mixed results with our goals.

The first goal, to produce a paper on a subject in depth, was mostly met. We studied the Lightcuts framework and made an attempt to implement it ourselves. This attempt, however, was not successful. We found the learning curve for working on a large code base such as pbrt to be steeper than anticipated. We got as far as constructing a tree from virtual lights, but were unable to use that tree to improve rendering performance.

Goals 3, 4, and 5 were fairly straightforward. We spent a great deal of time working with both versions of pbrt and learned quite a bit about each and their differences. Toward the end of the semester, we found that the third version did not implement VirtualLights, so we were forced to switch to the second version. This hiccup cost us a lot of time and energy, but we learned a valuable lesson about scoping out the code base first.

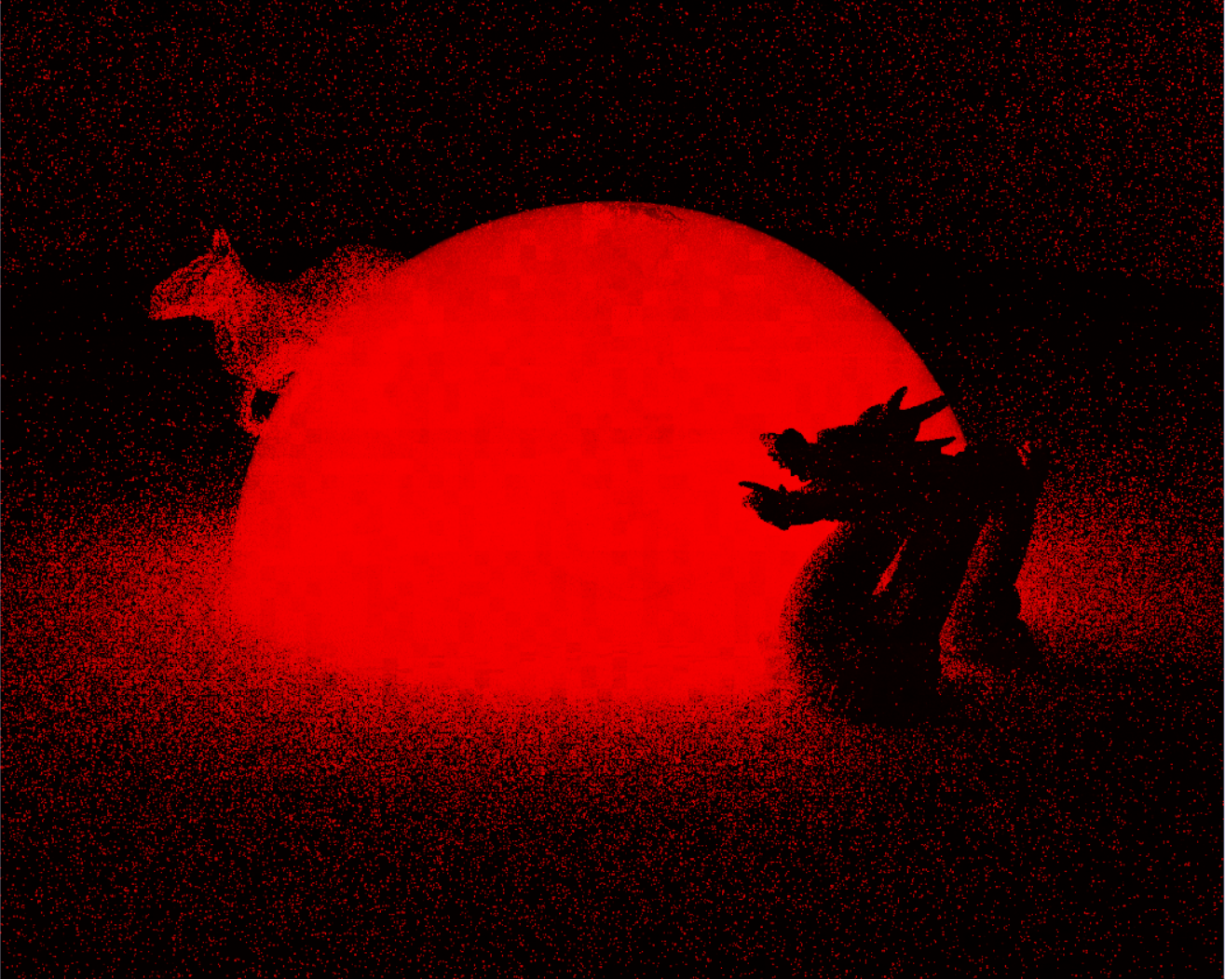

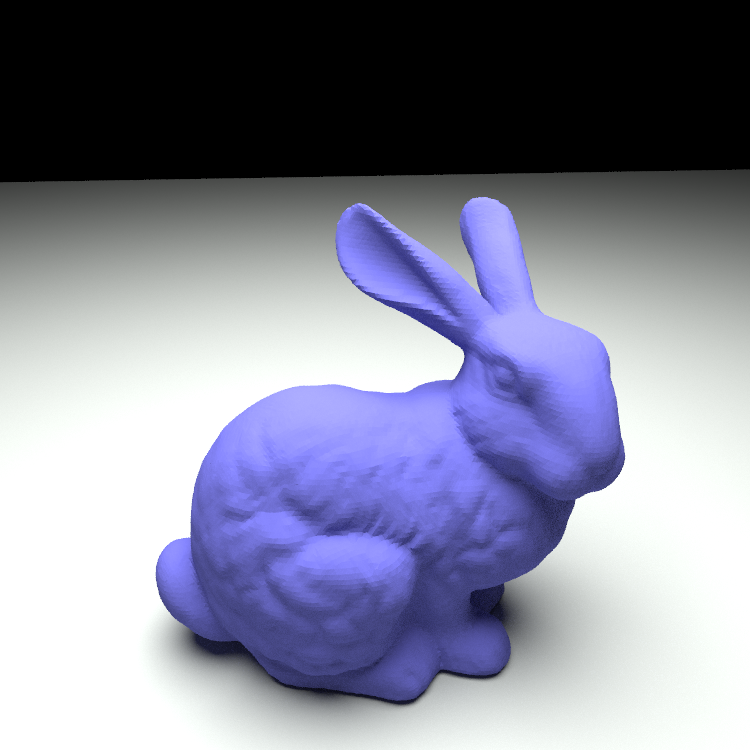

We were able to implement part of the lightcuts algorithm. We identified where pbrt constructs virtual lights and were able to construct a binary tree out of them. We were not able to build the tree to the full specification of the original algorithm, however. For example, our clusters do not identify a representative light, only their cumulative intensity. We were able to "pretty print" the resulting tree of light intensities. Here is a small example from the bunny scene shown above. Several settings were reduced when generating this output, so the scene was not quite as smooth as above.

Cluster (10.8, 11.5, 10.8)

| Cluster (5.9, 6.2, 5.9)

| | Cluster (1.9, 2.0, 1.9)

| | | Leaf (0.9, 0.9, 0.9)

| | | Leaf (1.0, 1.1, 1.0)

| | Cluster (4.0, 4.2, 4.0)

| | | Leaf (1.8, 1.9, 1.8)

| | | Leaf (2.2, 2.3, 2.2)

| Cluster (5.0, 5.3, 5.0)

| | Cluster (2.1, 2.3, 2.1)

| | | Leaf (1.7, 1.9, 1.7)

| | | Leaf (0.3, 0.3, 0.3)

| | Cluster (2.9, 3.0, 2.9)

| | | Leaf (2.0, 2.1, 2.0)

| | | Leaf (0.9, 1.0, 0.9)
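A printout in this format can be produced with a short recursive traversal. The sketch below is written in Python for brevity and is not our pbrt code. `LightNode`, `cluster`, and `format_tree` are hypothetical names, and each cluster stores only the summed RGB intensity of its children, mirroring the limitation described above.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

RGB = Tuple[float, float, float]


@dataclass
class LightNode:
    intensity: RGB                       # cumulative RGB intensity of the subtree
    left: Optional["LightNode"] = None
    right: Optional["LightNode"] = None


def cluster(a: LightNode, b: LightNode) -> LightNode:
    """Combine two subtrees; the parent stores only the summed intensity."""
    total = tuple(p + q for p, q in zip(a.intensity, b.intensity))
    return LightNode(total, a, b)


def format_tree(node: LightNode, depth: int = 0) -> List[str]:
    """Return one line per node, indented with '| ' for each level of depth."""
    kind = "Leaf" if node.left is None else "Cluster"
    r, g, b = node.intensity
    lines = ["| " * depth + f"{kind} ({r:.1f}, {g:.1f}, {b:.1f})"]
    for child in (node.left, node.right):
        if child is not None:
            lines.extend(format_tree(child, depth + 1))
    return lines


# Two leaves taken from the printout above, combined into one cluster.
pair = cluster(LightNode((0.9, 0.9, 0.9)), LightNode((1.0, 1.1, 1.0)))
print("\n".join(format_tree(pair)))
```

Returning a list of lines instead of printing directly keeps the traversal easy to test and lets the caller decide where the output goes.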

Overall, we found the experience very challenging. It was quite different from our usual classes, and we are thankful for the learning experience.

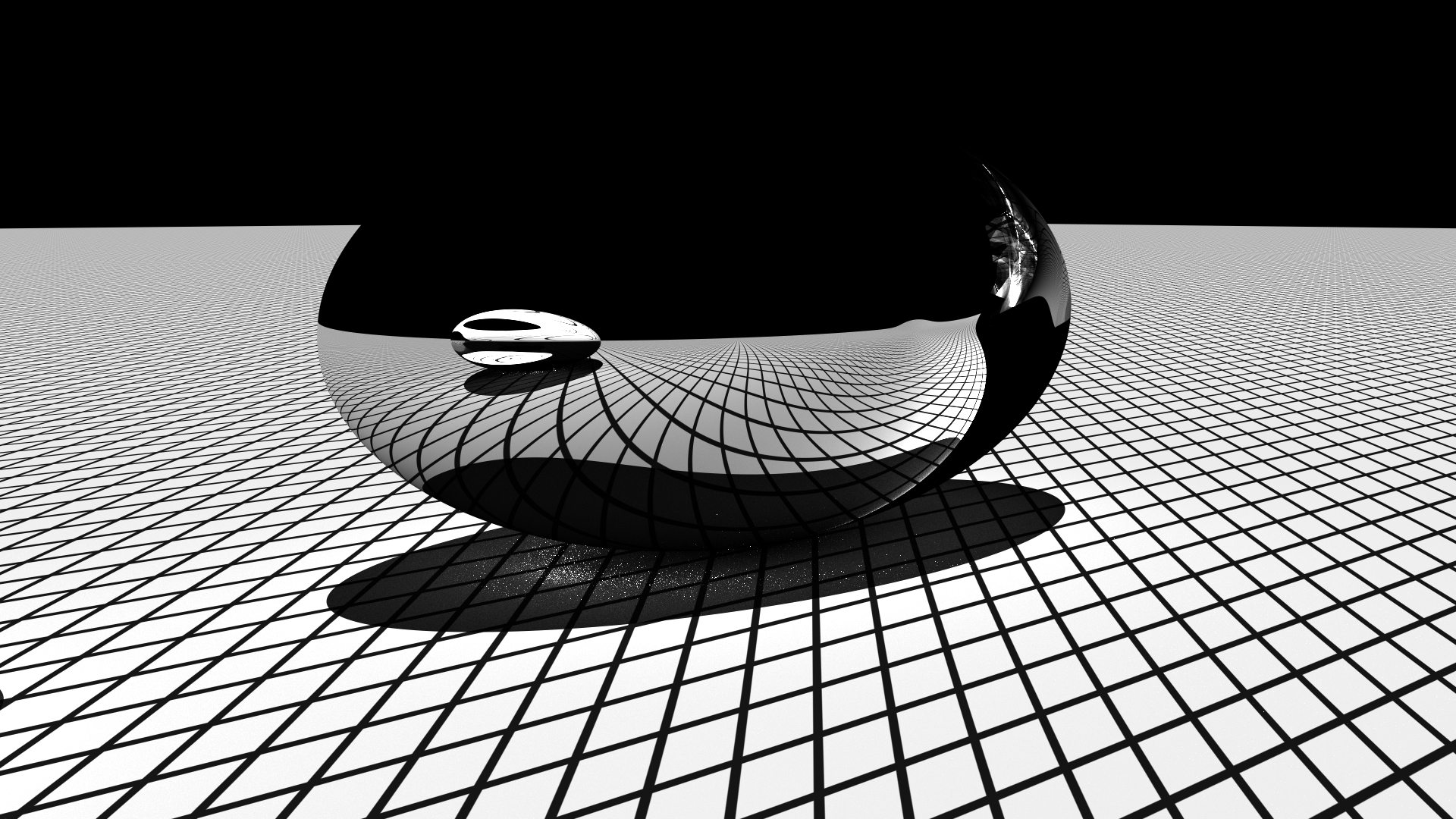

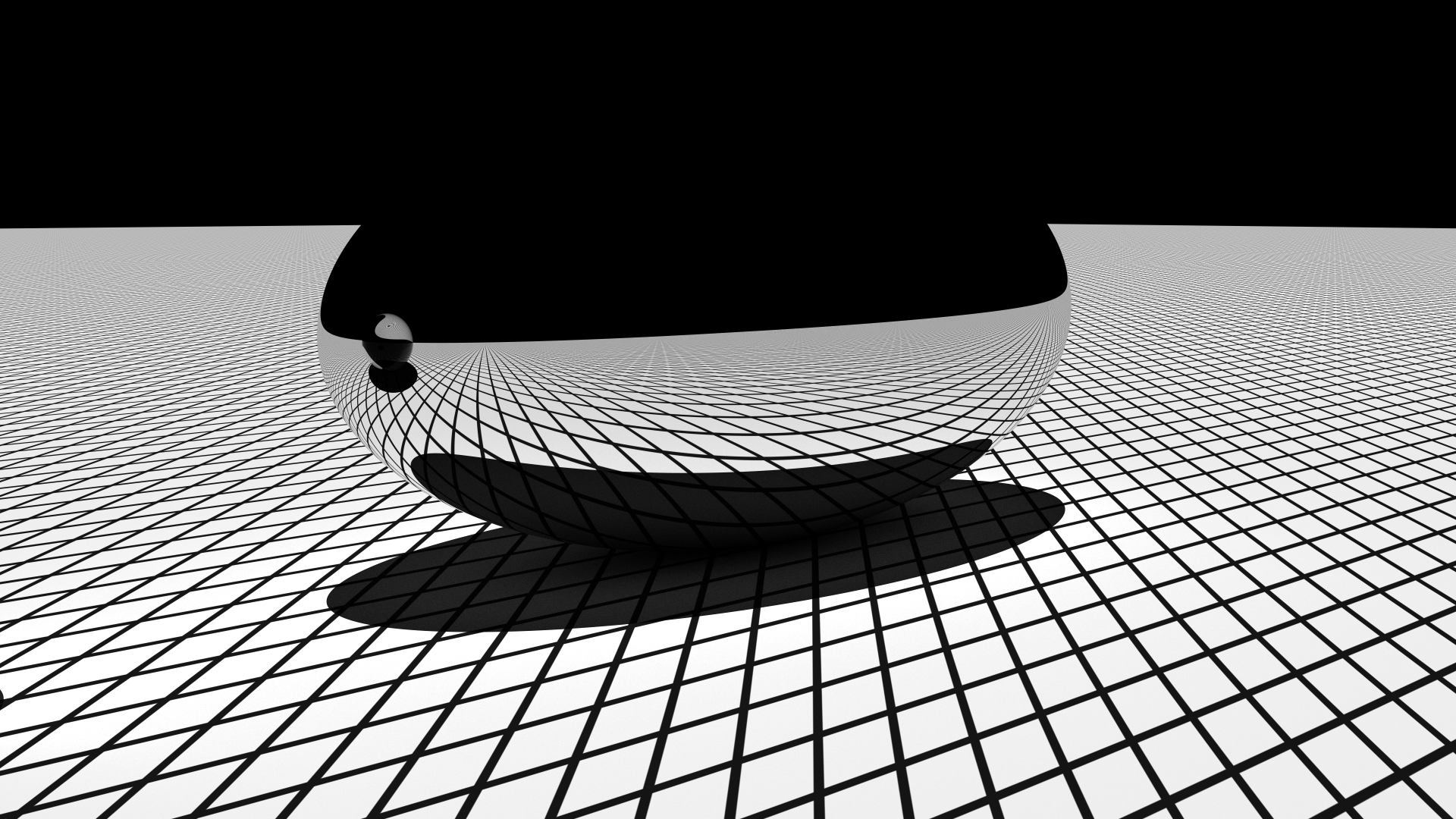

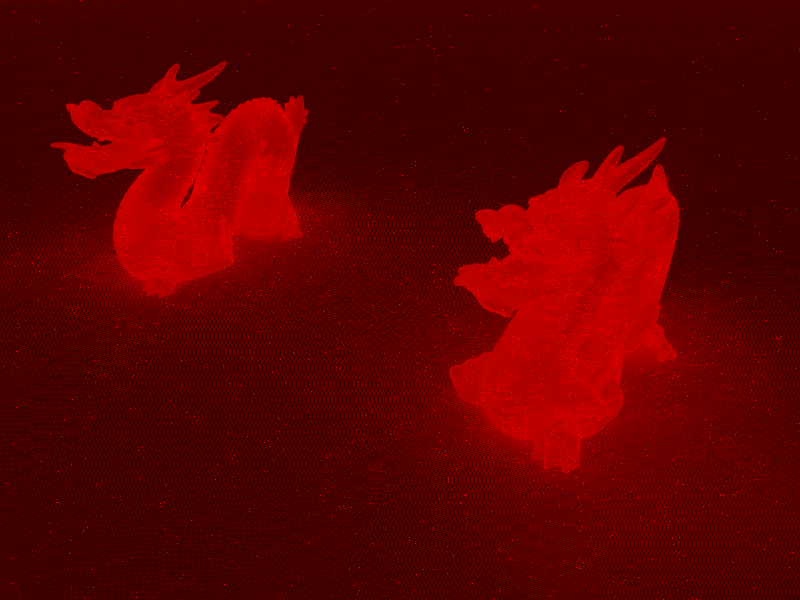

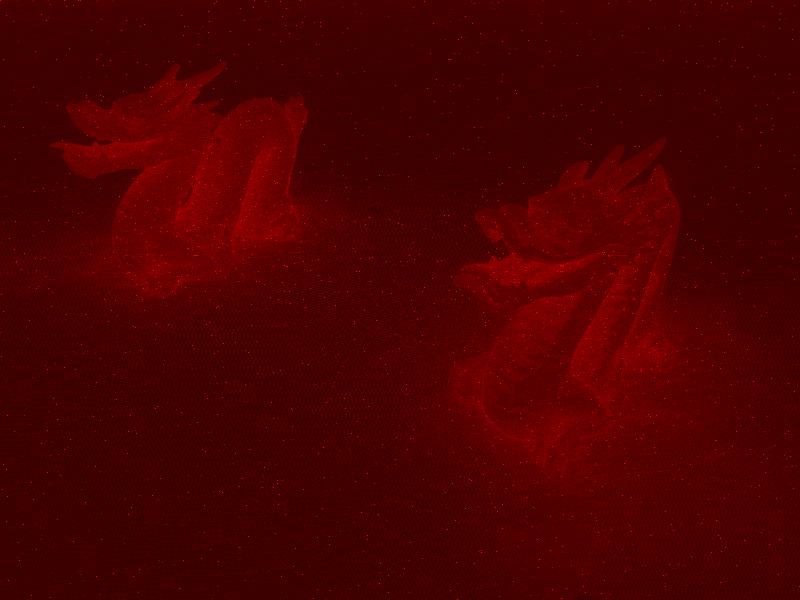

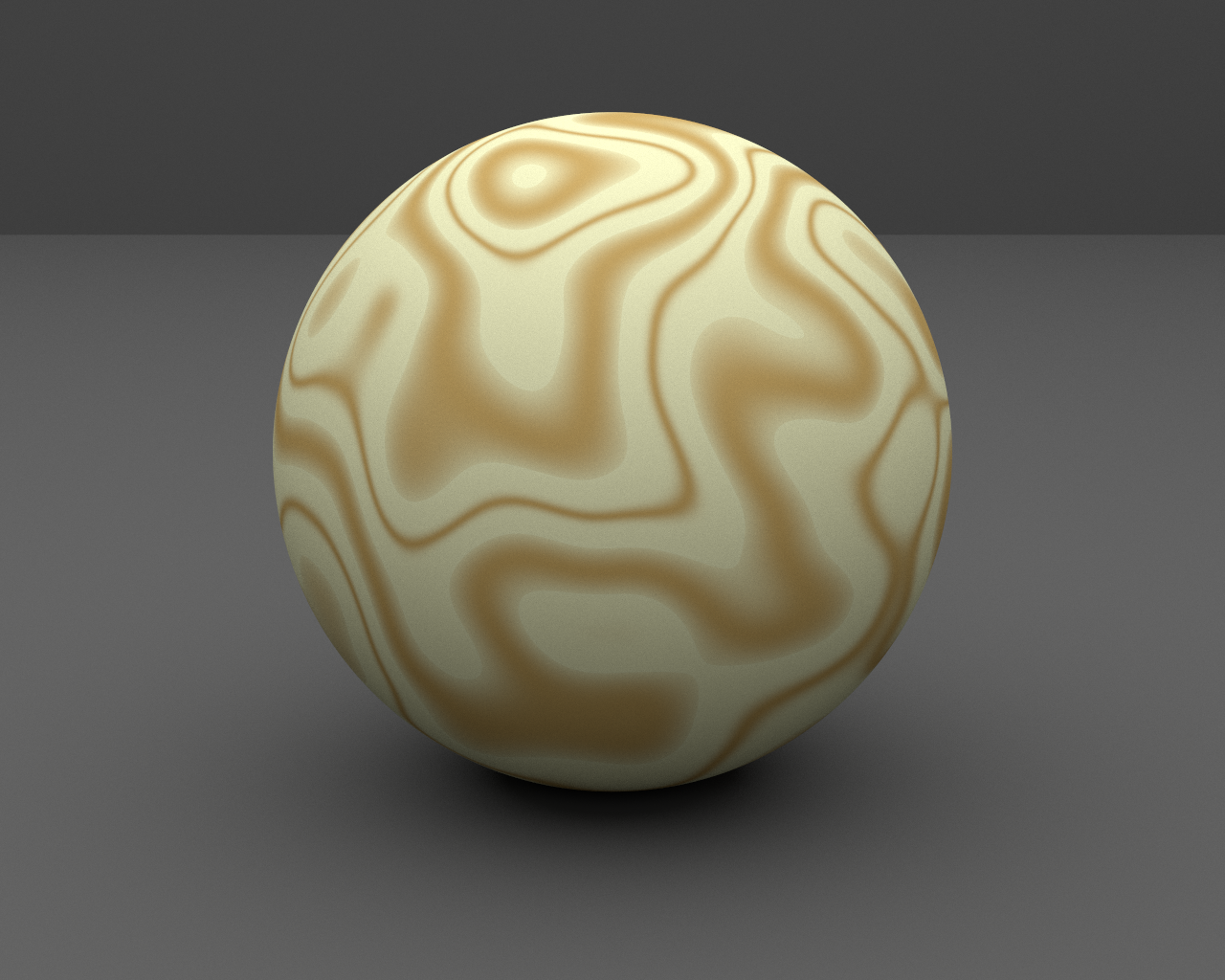

Image Gallery